This article originally appeared on our sister site datacenterdynamics.com. Read more from DCD.

Nvidia has opened its NVLink interconnect technology up to external companies, with the launch of NVLink Fusion.

Unveiled this week at Computex in Taiwan, the new offering allows customers to build semi-custom AI infrastructure that links non-Nvidia accelerators with Nvidia hardware in rack-scale solutions.

Cloud providers will also be able to use NVLink Fusion to scale up AI factories to “millions of GPUs,” using any ASIC in combination with Nvidia’s rack-scale systems and networking platform.

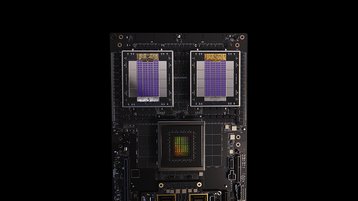

Nvidia’s NVLink is a high-performance interconnect that links GPUs to one another and to CPUs, enabling much faster data exchange than conventional PCIe connections.

NVLink is currently in its fifth generation and provides a total bandwidth of 1.8TB/s per GPU, which Nvidia said is 14x faster than PCIe Gen5. The company is also pairing NVLink Fusion with its networking portfolio, including ConnectX-8 SuperNICs, Spectrum-X, and Quantum-X800 InfiniBand switches, which provide up to 800Gbps of throughput, with support for co-packaged optics to be made available soon.
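Nvidia's 14x comparison only works if the per-GPU figure is read as 1.8 terabytes per second, not terabits. The back-of-envelope sketch below checks this. It assumes, beyond what the article states, that the PCIe baseline is a Gen5 x16 slot (16 lanes at 32 GT/s with 128b/130b encoding) and that both figures count both directions combined.

```python
# Back-of-envelope check of the "14x faster than PCIe Gen5" claim.
# Assumptions (not from the article): the PCIe baseline is a Gen5 x16 link
# (16 lanes at 32 GT/s, 128b/130b encoding), and both NVLink and PCIe
# figures are totals across both directions.

PCIE5_GT_PER_LANE = 32            # gigatransfers per second per lane
PCIE5_LANES = 16                  # a typical x16 GPU slot
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line coding overhead


def pcie5_x16_bidirectional_gbytes() -> float:
    """Usable PCIe Gen5 x16 bandwidth in GB/s, both directions combined."""
    per_direction_gbits = PCIE5_GT_PER_LANE * PCIE5_LANES * ENCODING_EFFICIENCY
    return 2 * per_direction_gbits / 8  # two directions, then bits -> bytes


def speedup(nvlink_gbytes_per_s: float) -> float:
    """Ratio of an NVLink per-GPU bandwidth (GB/s) to the PCIe baseline."""
    return nvlink_gbytes_per_s / pcie5_x16_bidirectional_gbytes()


pcie = pcie5_x16_bidirectional_gbytes()
as_terabytes = speedup(1800)      # reading the figure as 1.8 TB/s = 1800 GB/s
as_terabits = speedup(1800 / 8)   # reading it as 1.8 Tb/s = 225 GB/s

print(f"PCIe Gen5 x16 baseline: {pcie:.1f} GB/s")
print(f"1.8 TB/s per GPU -> {as_terabytes:.1f}x PCIe Gen5")
print(f"1.8 Tb/s per GPU -> {as_terabits:.1f}x PCIe Gen5")
```

Under these assumptions the terabyte reading lands at roughly 14x. The terabit reading would be less than 2x, which is why the unit matters.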

Nvidia has already lined up a number of partners for the launch of NVLink Fusion, naming MediaTek, Marvell, Alchip Technologies, Astera Labs, Synopsys, and Cadence as the first to adopt the technology for “model training and agentic AI inference.” The company also said that Fujitsu and Qualcomm will be the first to integrate NVLink Fusion into their CPUs.

“A tectonic shift is underway: for the first time in decades, data centers must be fundamentally rearchitected — AI is being fused into every computing platform,” said Jensen Huang, founder and CEO of Nvidia. “NVLink Fusion opens Nvidia’s AI platform and rich ecosystem for partners to build specialized AI infrastructures.”

Nvidia first announced NVLink in March 2014 and has traditionally kept the interconnect technology to itself, a stance that led to the establishment of the UALink Consortium in May 2024. The group bills itself as an alternative to Nvidia’s NVLink and says it aims to define and establish an open industry standard that enables AI accelerators to communicate more effectively and efficiently.

The consortium released its first interconnect specification in April 2025, offering 200Gbps of throughput per lane and support for up to 1,024 accelerators per pod.
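To put the per-lane figure alongside Nvidia's per-GPU number, the sketch below counts how many 200Gbps lanes would be needed to match it. This is a rough illustration under hypothetical assumptions, not from either vendor:

- 200Gbps is usable per-lane, per-direction bandwidth with no protocol overhead.
- Nvidia's per-GPU NVLink figure is treated as 1.8 terabytes per second, split evenly across the two directions.

The helper name `lanes_to_match` is invented for this example.

```python
import math

# Hypothetical assumptions for illustration only:
# - 200 Gbps is usable per-lane, per-direction bandwidth (no protocol overhead)
# - NVLink's 1.8 TB/s per-GPU total is split evenly across two directions

UALINK_GBITS_PER_LANE = 200


def lanes_to_match(gbytes_per_s_per_direction: float,
                   gbits_per_lane: float = UALINK_GBITS_PER_LANE) -> int:
    """Smallest whole number of lanes whose combined rate meets the target."""
    return math.ceil(gbytes_per_s_per_direction * 8 / gbits_per_lane)


nvlink_per_direction = 1800 / 2   # GB/s each way, under the even-split assumption
lanes = lanes_to_match(nvlink_per_direction)
print(f"200 Gbps lanes per accelerator to match NVLink 5 per direction: {lanes}")
```

Under these assumptions the answer is 36 lanes per accelerator. Real designs differ in lane counts, encoding, and protocol overhead, so treat this only as a sense of scale.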

NVLink Fusion silicon design services and solutions are available now from MediaTek, Marvell, Alchip, Astera Labs, Synopsys, and Cadence.